- Elham Tabassi’s article discusses the potential and challenges of generative AI, focusing on minimizing harms and maximizing benefits for society.

- The article highlights NIST’s efforts to develop guidelines and testing approaches to ensure AI trustworthiness and address societal implications, including misinformation and job insecurity.

- Tabassi emphasizes the importance of a human-centered approach to AI development, considering socio-technical impacts and ensuring that AI benefits everyone.

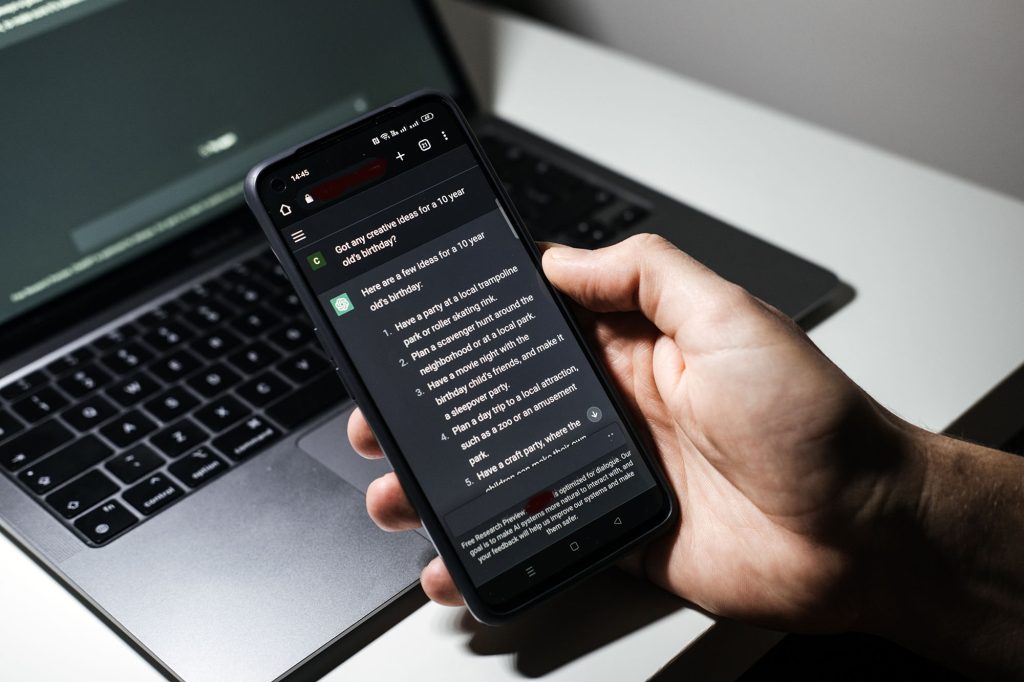

Elham Tabassi explores the complexities surrounding generative AI, such as ChatGPT, and its societal impact. The article begins by drawing parallels between the advent of social media and the emergence of generative AI tools. Just as social media brought connection and challenges, generative AI presents a mix of potential benefits and risks, including misinformation and job insecurity.

Tabassi notes that the National Institute of Standards and Technology (NIST) is actively working with the technology community to develop safeguards around all types of AI, not just text and image generation, with the aim of accounting for AI’s impact on society. She draws on her more than two decades of experience in machine learning and trustworthy AI, and underscores NIST’s commitment to addressing the potential negative consequences of AI while the technology is still in its early stages.

A key focus of the article is NIST’s AI Risk Management Framework, developed in collaboration with various stakeholders. This framework aims to help technology companies consider the ramifications of their products. Tabassi emphasizes the importance of a socio-technical approach to testing AI systems, one that considers both how they function and how they may affect individuals, communities, and society.

The article also discusses NIST’s ongoing work on benchmarks and testing approaches for AI trustworthiness, including guidelines on language model testing, cyber incident reporting, and authenticity verification of digital content. For example, to combat the challenge of deepfakes, NIST is considering authenticity markings similar to social media account verifications.

Tabassi stresses that while AI technology will continue to evolve rapidly, comprehensive and flexible guidelines are necessary. She mentions that many technology companies have agreed to voluntarily abide by NIST’s guidelines, showing their commitment to creating trustworthy products.

In conclusion, Tabassi advocates for a measured approach to AI, emphasizing that building AI systems should be about not only technical capability but also their impacts. The future of AI, she believes, is full of possibilities if it is developed with a human-centered focus, ensuring that it works for people and not the other way around.
